“We have great drone platforms…we just don’t have great reasons to fly them (yet)”

Our quest to build the world’s best hyperspectral data analytics service depends on having the right sensor feeding it. Because the commercial hyperspectral options available to the drone community are cost-prohibitive for most drone users, our only option was to build one: an affordable, highly capable, easy-to-use sensor that makes aerial data services essential for any market where timely, critical decisions matter. So we jumped at the opportunity to use a US Air Force Commercial Solutions Opening (CSO) under the Air Force Research Laboratory (AFRL) AFWERX program to fund the development of the sensor we wanted feeding our analysis addiction. Let us take you on a short trip through the process.

Blending Data Analytics and Drone Versatility with Sensor Design

Because the sensor’s host is a drone, smaller is better, but small often means less capable. We believed the analytics could offset some of the traditional limitations of small sensors. Additionally, the drone’s ability to alter its flight gave us options for increasing the sensor’s performance once the sensor is integrated with the flight controller. Finally, the sensor is intended for mapping material classifications, not for creating orthomosaic maps. This let us use smaller, cheaper components and rely on clever calculations to pinpoint materials on the ground.
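The post doesn’t say which classification math the analytics use. As one illustration of how a material can be matched from its spectrum, here is a minimal sketch of the spectral angle mapper (SAM), a widely used hyperspectral technique; it is not necessarily the method used in this project. The library spectra are made-up four-band toy values, not measurements, and `spectral_angle`, `classify`, and the 0.1 rad threshold are hypothetical.

```python
import math

def spectral_angle(pixel, reference):
    """Angle (radians) between two spectra; 0 means identical shape."""
    dot = sum(p * r for p, r in zip(pixel, reference))
    norm_p = math.sqrt(sum(p * p for p in pixel))
    norm_r = math.sqrt(sum(r * r for r in reference))
    if norm_p == 0 or norm_r == 0:
        raise ValueError("spectra must be non-zero")
    # Clamp to guard against floating-point values just outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / (norm_p * norm_r)))
    return math.acos(cos_theta)

def classify(pixel, library, max_angle_rad=0.1):
    """Return the closest library material, or None if none is within the threshold."""
    best_name, best_angle = None, max_angle_rad
    for name, ref in library.items():
        angle = spectral_angle(pixel, ref)
        if angle < best_angle:
            best_name, best_angle = name, angle
    return best_name

# Hypothetical toy library: four bands, illustrative values only.
LIBRARY = {
    "vegetation": [0.05, 0.08, 0.45, 0.30],
    "bare_soil": [0.15, 0.20, 0.25, 0.30],
    "water": [0.06, 0.04, 0.02, 0.01],
}

# A dimmer copy of the vegetation spectrum still matches vegetation.
print(classify([0.03, 0.048, 0.27, 0.18], LIBRARY))
```

Because SAM compares only the shape of the spectrum, not its overall brightness, a uniformly darker or brighter pixel maps to the same material, which helps when illumination changes during a flight.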

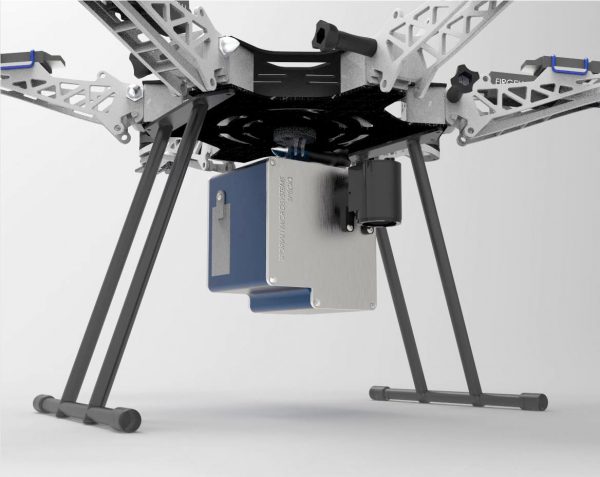

After many discussions with us, the sensor designer and builder, Sporian Microsystems, Inc. of Lafayette, Colorado, developed and assembled the prototype used for testing. Weighing just 24 ounces and running on a 12-volt supply, it is suitable for multirotor systems, much like other RGB and multispectral sensors on the market.

Key features of the design include:

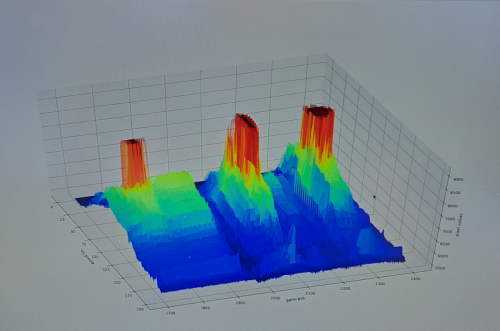

· An oscillating mirror generating the sensor Field of View (FOV) (think of a whisk broom)

· A “binning” system based on the sensor’s data collection rate that generates “points” on the ground within the FOV

· Integrating two sensors to cover 405-1700 nm of the electromagnetic spectrum (Visible to Shortwave Infrared)

· Ruggedized housing to handle dynamic drone environments
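To make the whisk-broom and binning idea concrete, here is a minimal sketch under assumed parameters: the mirror angle is modeled as a sinusoid, the detector is read at a fixed rate, and each ground “point” is the average of a fixed number of consecutive readings. The function name `scan_points`, the sinusoidal mirror model, the flat-ground, nadir-pointing geometry, and every number in the usage line are hypothetical, not the sensor’s actual design values.

```python
import math

def scan_points(altitude_m, half_angle_deg, mirror_hz, sample_hz, samples_per_bin):
    """Cross-track ground offsets (m) of binned points for one mirror sweep.

    Hypothetical model: mirror angle theta(t) = theta_max * sin(2*pi*f*t),
    detector read at sample_hz, each point = mean of samples_per_bin readings,
    flat ground, sensor looking straight down at the centre of the sweep.
    """
    theta_max = math.radians(half_angle_deg)
    # One sweep from -theta_max to +theta_max takes half a mirror period.
    sweep_s = 0.5 / mirror_hz
    n_samples = round(sweep_s * sample_hz)
    n_bins = n_samples // samples_per_bin
    points = []
    for b in range(n_bins):
        offsets = []
        for k in range(samples_per_bin):
            i = b * samples_per_bin + k
            # Centre the sweep on t = 0 so the mirror passes nadir mid-sweep.
            t = i / sample_hz - sweep_s / 2
            theta = theta_max * math.sin(2 * math.pi * mirror_hz * t)
            offsets.append(altitude_m * math.tan(theta))
        points.append(sum(offsets) / len(offsets))
    return points

# Illustrative values: 60 m altitude, +/-15 deg swing, 10 Hz mirror,
# 1 kHz sampling, 5 samples per bin -> 10 points per sweep.
for x in scan_points(60.0, 15.0, 10.0, 1000.0, 5):
    print(f"{x:7.2f} m")
```

Because a sinusoidally driven mirror slows near the ends of its swing, bins near the edges of the FOV span a smaller angle than bins near nadir, so the points crowd toward the edges; a real design might instead use a different mirror drive or variable bin sizes.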

Challenges Along the Path

Most of the parts acquisition and assembly occurred right after the big Covid slowdown in parts manufacturing. Several key components made by only a few manufacturers (mirrors, motors) had unpredictable long lead times, which delayed delivery of the sensor.

Most of the parts acquisition and assembly occurred right after the big Covid slowdown of parts manufacturing. Several key components, made by only a few manufacturers (mirrors, motors) had unpredictable long-lead times which caused delays in delivery of the sensor.

Because the data analytics were going to inform the sensor’s design improvements (and speed to market is a real thing!), we took delivery of the sensor minus a few bells and whistles. For example, aiming the sensor with absolute certainty at a target 200 feet away, with a 15-inch “spot size,” was challenging. We solved this with clever use of targeting panels and custom software able to “visualize” the RGB of our targets across the FOV.
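A quick calculation shows why aiming was hard. Assuming the 15-inch figure is the full spot diameter at a 200-foot range, the implied instantaneous field of view is only about 6.25 mrad (roughly 0.36°), and a pointing error of just 1° shifts the spot by about 42 inches, nearly three spot widths. The helper names `ifov_rad` and `spot_shift_in` are illustrative.

```python
import math

INCHES_PER_FOOT = 12.0

def ifov_rad(spot_in, range_ft):
    """Full-angle instantaneous field of view implied by a spot diameter at a range."""
    range_in = range_ft * INCHES_PER_FOOT
    return 2.0 * math.atan(spot_in / (2.0 * range_in))

def spot_shift_in(pointing_error_deg, range_ft):
    """Lateral shift of the spot centre (inches) caused by a pointing error."""
    return range_ft * INCHES_PER_FOOT * math.tan(math.radians(pointing_error_deg))

ifov = ifov_rad(15.0, 200.0)
print(f"IFOV: {ifov * 1000:.2f} mrad ({math.degrees(ifov):.3f} deg)")
shift = spot_shift_in(1.0, 200.0)
print(f"1 deg pointing error moves the spot {shift:.1f} in ({shift / 15.0:.1f} spot widths)")
```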

Another significant challenge was determining where the sensor was looking so we could calculate the ground location of a material detection. We solved this by tapping into the drone’s flight controller and reading its GPS and inertial measurement data, which give the drone’s position and orientation relative to the ground. We also added a high-resolution RGB ride-along camera that records images of the ground, adding context to the data analytics so an operator can rapidly confirm a target detection.
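This kind of calculation is often called direct georeferencing. Below is a minimal sketch that projects one look direction onto the ground from the drone’s GPS position and attitude. It assumes flat terrain at a known height below the drone, a sensor boresight aligned with the drone’s body z-axis (pointing down), a mirror that scans about the body x-axis (positive angles look to the right), no lever-arm or boresight-misalignment corrections, and a spherical-Earth meters-to-degrees conversion that is only valid for small offsets. `Pose`, `body_to_ned`, and `ground_point` are hypothetical names, not part of any flight-controller API.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_378_137.0  # WGS-84 equatorial radius, used as a sphere here

@dataclass
class Pose:
    lat_deg: float
    lon_deg: float
    agl_m: float      # height above the (assumed flat) ground
    roll_deg: float
    pitch_deg: float
    yaw_deg: float    # heading, clockwise from north

def body_to_ned(roll, pitch, yaw):
    """ZYX (yaw-pitch-roll) rotation matrix from body frame to local North-East-Down."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def ground_point(pose, scan_deg):
    """Latitude/longitude where the look ray at scan_deg hits flat ground."""
    a = math.radians(scan_deg)
    # Body frame: x forward, y right, z down; the mirror tilts the ray toward +y.
    look_body = [0.0, math.sin(a), math.cos(a)]
    r = body_to_ned(math.radians(pose.roll_deg),
                    math.radians(pose.pitch_deg),
                    math.radians(pose.yaw_deg))
    n, e, d = [sum(r[i][j] * look_body[j] for j in range(3)) for i in range(3)]
    if d <= 0:
        raise ValueError("look ray does not intersect the ground")
    scale = pose.agl_m / d  # stretch the unit ray until it drops agl_m metres
    north_m, east_m = n * scale, e * scale
    lat = pose.lat_deg + math.degrees(north_m / EARTH_RADIUS_M)
    lon = pose.lon_deg + math.degrees(
        east_m / (EARTH_RADIUS_M * math.cos(math.radians(pose.lat_deg))))
    return lat, lon

# Example pose (illustrative): level flight heading north, 60 m above ground.
print(ground_point(Pose(38.8, -104.8, 60.0, 0.0, 0.0, 0.0), 10.0))
```

In a fielded system the timestamps of GPS, IMU, and mirror-angle samples would also need to be synchronized, and terrain elevation would replace the flat-ground assumption.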

Finally, a design goal was to be agnostic to the drone platform, so we had to develop a universal mounting system that makes the sensor easy to attach to different drones. We opted for a lightweight, 3D-printed carbon-fiber mounting bracket for strength and rigidity. The top mounting plate can be attached to a rail system or to bottom-mounted plates on the drone. The bracket also holds the sensor’s companion compute node and optional battery.

The next blog post will go more in-depth on data analytics and drone integration for autonomous operation. Flight testing was not part of the USAF contract and will be conducted in the coming months. Stay tuned!

UCCS Students and Faculty, led by Dr. Adham Atyabi, helping build the Hyperspectral Sensor

Phase 1 Team (Sporian Microsystems and Spectrabotics) testing the prototype hyperspectral sensor at the Joint Interagency Field Experiment hosted by the Naval Postgraduate School, Paso Robles, CA